Snapshot of the load ap.space sees from AI crawlers

-

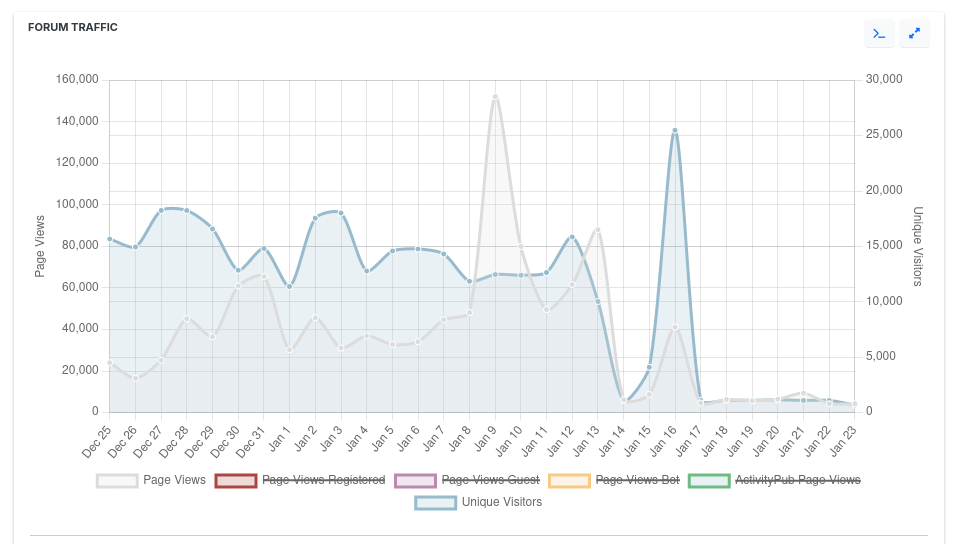

Can you guess when I turned Anubis back on?

- Grey line (left-hand y-axis) tracks page views

- Blue line (right-hand y-axis) tracks unique users

You can even see the spike in traffic that brought down the site hard enough that I got my butt in gear to tune Anubis and turn it back on.

Based on the numbers here, there is a thirteen-fold decrease in activity (a ~92% drop in traffic), all of it traffic that Anubis identified as bots and blocked.
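For anyone checking the math, here is a minimal sketch of how a fold decrease maps to a percentage drop. The function name `percent_drop` is just illustrative, and the only input is the thirteen-fold figure from the graph, not any raw traffic counts.

```python
def percent_drop(fold: float) -> float:
    """Convert an N-fold decrease into a percentage drop.

    An N-fold decrease leaves 1/N of the original traffic,
    so the drop is (1 - 1/N) expressed as a percentage.
    """
    if fold <= 0:
        raise ValueError("fold must be positive")
    return (1 - 1 / fold) * 100


# Thirteen-fold decrease, as read off the graph above.
print(f"{percent_drop(13):.1f}% drop")  # prints "92.3% drop"
```

So a thirteen-fold decrease leaves roughly 7.7% of the original traffic, which is where the ~92% figure comes from.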

Selective adjustments were made to the nginx config and Anubis bot policy to allow certain bits of traffic through (for unimpeded federation, etc.), but otherwise the site is now quite stable on a small potato server.

The default bot policy does let search engine crawlers through (I think), so that is a win-win.

Thank you so much @cadey@pony.social, my next stop is your GitHub Sponsors page.

-

julian@community.nodebb.org shared this topic


-

@julian We’re approaching a version of the Terminator universe where site access will have to be restricted to in-person verified human beings while AI agents scan surveillance cameras and phone traffic to identify humans with access to target sites and deepfake them to obtain access credentials.

Our only hope may be the Gibson Sprawl end where the AIs cease to give a shit about humanity and just talk to each other.